Stop guessing what your AI agents can do.

FRAME gives you infrastructure for AI adoption. Know exactly what each agent is allowed to do, who's accountable when things break, and how to fix it before regulators ask questions.

You're probably losing track right now.

Someone spins up a Claude project. Works great. Then another team member tries ChatGPT for the same task. Both agents now have access to customer data. Nobody documented which one is allowed to make decisions. Credentials get shared. Prompts drift. Then someone asks: "Which AI agent sent that email to the client?"

... Silence

This isn't because your team is careless. It's because AI tools feel capable and sound confident. So we skip the design work we'd normally do for any system that touches customer data or makes decisions.

You need infrastructure before you need permission.

FRAME is infrastructure, not governance theater.

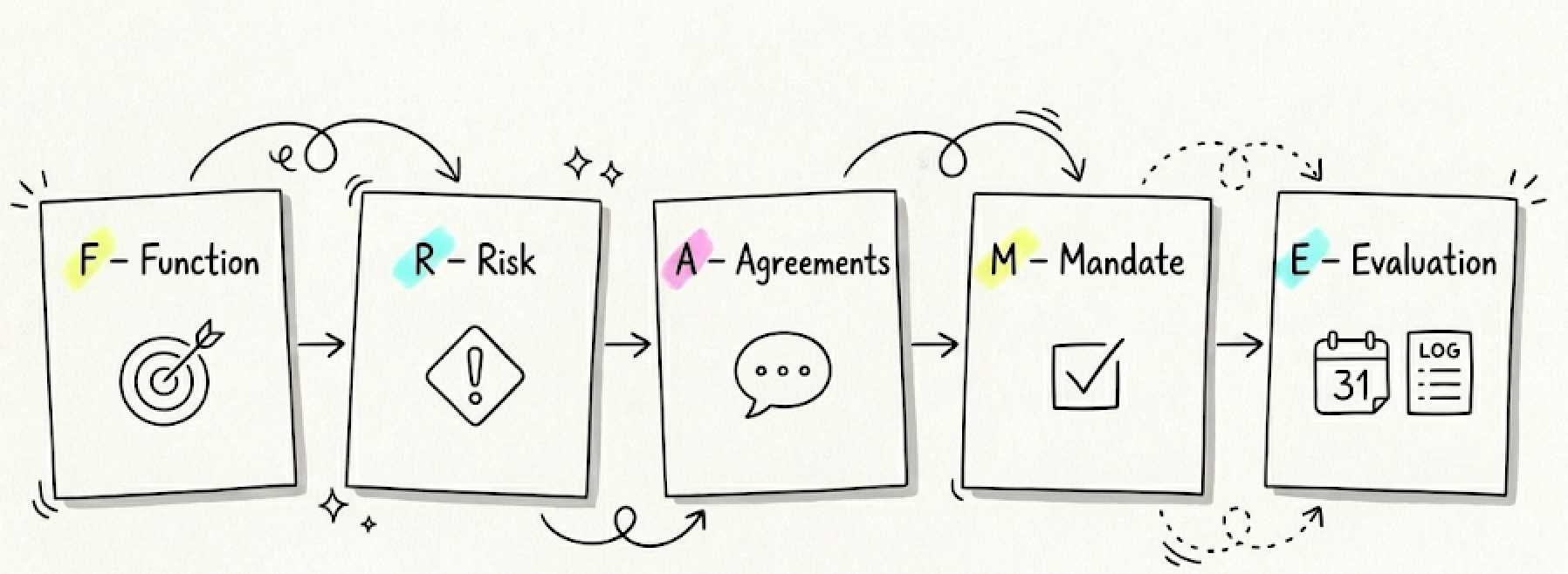

It's five questions you answer before deploying any AI agent...

Takes 20 minutes. Prevents months of cleanup.

Think of it like this: you wouldn't let a new team member access your production database without defining their role, permissions, and escalation path. AI agents deserve the same rigor.

What FRAME gives you:

FRAME gives AI the context it needs to be genuinely helpful.

- Ownership
  - Clear ownership for every AI agent's actions.
  - Built-in human oversight. The EU AI Act's term for this is "human oversight" (Article 14).

- Clarity
  - Documented permissions your auditors can actually read.

- A Plan
  - A decision trail when things go wrong.

Five questions. Twenty minutes. Done.

For each AI agent you deploy, answer these:

Function

What job is this agent actually doing?

Not "helping with marketing." That's too vague.

Specific output: "Generates first draft of weekly newsletter from bullet points I provide."

Document: Input format, expected output, what success looks like.

Risk

What breaks if this agent screws up?

Be honest. Customer trust? Revenue? Compliance? Or just... you look silly in a Slack thread?

Document: Worst-case scenario, who gets hurt, what data it touches, regulatory implications.

Agreements

What can't this agent do without asking?

Define the boundaries. "Agent can draft emails but can't send them." "Agent can analyze data but can't store it." "Agent can suggest decisions but can't execute them."

Document: Hard limits, required human checkpoints, escalation triggers.
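If you'd rather keep these boundaries somewhere a script can check them, here is a minimal sketch. It assumes you record each agent's agreements as two sets of action names. The class, the function, and the action names (`draft_email`, `send_email`, and so on) are hypothetical and only for illustration; they are not part of the FRAME worksheet.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Agreements:
    """Hypothetical record of one agent's boundaries (illustrative names)."""
    allowed: FrozenSet[str]         # actions the agent may take on its own
    needs_approval: FrozenSet[str]  # actions that require a human checkpoint


def check_action(agreements: Agreements, action: str,
                 approved_by: Optional[str] = None) -> str:
    """Return 'allow', 'escalate', or 'deny' for a requested action."""
    if action in agreements.allowed:
        return "allow"
    if action in agreements.needs_approval:
        # A named human must sign off before the action goes ahead.
        return "allow" if approved_by else "escalate"
    # Anything not listed is out of bounds by default.
    return "deny"


# "Agent can draft emails but can't send them."
# "Agent can analyze data but can't store it."
newsletter_agent = Agreements(
    allowed=frozenset({"draft_email", "analyze_data"}),
    needs_approval=frozenset({"send_email"}),
)
```

The design choice worth copying is the last line of `check_action`: an action nobody wrote down is denied, so a forgotten boundary fails safe instead of failing open.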

Mandate

Who owns this agent's decisions?

One person. Not "the team" or "whoever's using it." One name. That person answers when the agent makes a mistake.

Document: Decision owner, backup owner, who they report to.

Evaluation

How do we know this is working?

Not quarterly reviews. Lightweight checks. "Every Friday, spot-check three outputs." "Monthly audit of what data was accessed."

Document: Check frequency, what you're checking, who's checking it.
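The five answers also fit in a small record you can keep next to the agent's config. This is a sketch under assumptions, not the FRAME worksheet itself: the `FrameRecord` class, its field names, and the sample answers are made up for illustration.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class FrameRecord:
    """Hypothetical one-agent FRAME record (field names are illustrative)."""
    agent_name: str
    function: str    # What job is this agent actually doing?
    risk: str        # What breaks if this agent screws up?
    agreements: str  # What can't this agent do without asking?
    mandate: str     # Who owns this agent's decisions? One name.
    evaluation: str  # How do we know this is working?

    def missing_answers(self) -> List[str]:
        """Return the names of any fields left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Sample answers are invented for this example.
record = FrameRecord(
    agent_name="newsletter-drafter",
    function="Generates first draft of weekly newsletter from bullet points I provide.",
    risk="Inaccurate copy reaches subscribers.",
    agreements="Can draft emails but can't send them.",
    mandate="",  # nobody has been named yet
    evaluation="Every Friday, spot-check three outputs.",
)

print(record.missing_answers())  # prints ['mandate']
```

A record with a blank field is a useful signal on its own: here the agent has a job, a risk, and a boundary, but nobody who answers for it.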

What you get.

- Begin small, begin conscious
  - Start with one agent. Document it properly. Add more as you build confidence. FRAME scales from "one person testing Claude" to "twenty agents across five teams."

- Regulatory alignment without the theater
  - The EU AI Act requires human oversight for high-risk AI systems (Article 14). FRAME's Mandate and Evaluation components are built to support this. You're not doing compliance theater; you're building infrastructure that happens to satisfy regulators.

- Fix accountability gaps
  - When something breaks, you know which agent did it, who owns the decision, what the boundaries were, and why it happened. No more "I think maybe someone's ChatGPT did that?"

- Prevent shadow AI
  - Once you have FRAME documentation, teams can't quietly spin up new agents without answering the five questions. It makes the invisible visible.

| WITHOUT FRAME | WITH FRAME |
|---|---|
| "I think Sarah's using ChatGPT for that?" | Clear owner documented for each agent |
| Shared credentials nobody tracks | Defined permissions and access controls |
| "Which AI sent that client email?" | Decision trail for every agent action |
| Quarterly panic about compliance | Built-in regulatory alignment |
| Shadow AI tools multiplying | Visible infrastructure for all agents |
| Data leaking between tools | Documented boundaries and escalation paths |

Start today.

Pick one AI agent your team already uses. Could be a Claude project, a ChatGPT workflow, a GitHub Copilot setup. Doesn't matter. Spend 20 minutes answering the five FRAME questions. Write it down. Share it with your team.

Now you have infrastructure. Next time someone wants to add an AI agent, they fill out FRAME first. Suddenly you have governance without slowing anyone down.

WHAT YOU NEED

- The FRAME worksheet (I'll send it)

- 20 minutes

- One AI agent to start with

- Willingness to write things down

WHAT YOU DON'T NEED

- Enterprise AI strategy document

- Consulting engagement

- Committee approval

- Six months of planning

This is practical infrastructure, not consultant theory.

Get the FRAME worksheet.

I'll send you access to all the related files, so you're ready to start documenting your first AI agent today.

I'll send you the FRAME files (and a subscription to my newsletter).

No spam. No upsells. I promise I only send you things I'd want to read and use myself.